This survey should perhaps be taken with a pinch of salt: it was commissioned by AI search and personalisation company Coveo. But as the rapidly developing technology becomes increasingly ubiquitous, the direction of travel is clear.

David Gunkel – artificial intelligence researcher and author of books including Person, Thing, Robot – would prefer to receive a “really bad gift” picked by a human than a perfect gift chosen by a large language model.

“Because the process behind it is interesting, right?” he says. “If my kid gave me a gift like that, with a story, I think that would be a really interesting conversation.”

But Gunkel, one of America’s foremost AI academics, understands the outsourcing temptation. “If you don’t know what to get your mom, if the platform is able to steer you in various directions and say, you know, ‘This is what moms are really going for’, it helps move some of the load off of your shoulders. ‘Yeah, maybe Mom would like a coffee maker’.”

According to a recent global survey by Accenture, 95% of consumers think that generative AI could help them give better gifts.

But we need to be wary, says Gunkel: the answers are going to reinforce existing biases. “Large language models are great averaging machines. They’ve been trained on all this textual data from human beings, so if you’re asking an AI ‘Christmas gifts for mom’, they don’t know what ‘mom’ or ‘Christmas gifts’ are. What they know is – when those words line up, what are the next most probable sequences of words in sentences that have already been generated in the past?”

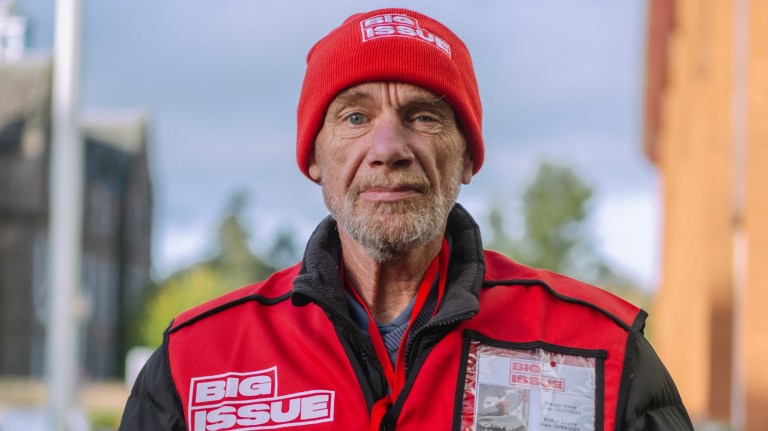

Advertising helps fund Big Issue’s mission to end poverty

As an experiment, I ask ChatGPT what to get a 26-year-old woman for Christmas. It congratulates me on my “great question” before spitting out some options: a fitness tracker, a ‘cookbook or personalised apron’, and pearl earrings.

When I ask the same question for a 26-year-old man, I am directed to buy a multi-tool, a ‘Jack Daniel’s steak board’ and LED outdoor lights for fishing.

“Yeah, that’s not surprising,” says Gunkel. “It’ll tell you that dad wants a drill for Christmas, and mum wants a coffee maker. While in some cases, the average is good, in other cases, the average can be skewed by various cultural expectations, by linguistic constructions of the past, by all kinds of things that are baked into the data set.”

The gendered gift guide is nothing new. If you’ve ever opened a magazine from November onwards, you’ll have encountered a ‘top 25 best gifts for him/her’ feature. Whether you find the bath bomb yourself or a machine finds it for you, it’s not the end of the world, right?

But “these algorithms do a lot more than pick presents”, says Tim Davies, research director at Connected by Data, a campaign group working to give ordinary people and communities a voice in data and AI governance.

“When bias is a clear output, we should be questioning that. The bias patterns point to a broader issue with AI. It might suggest: get all women perfume. Of course, that’s not great, but it’s not new. But when that bias is moving into our machines, machines that do a lot more than pick presents, we should be questioning that.”


AI and its biases are increasingly embedded in contexts where the results really, really matter. Take healthcare.

A US study published in 2021 analysed the results of a medical AI trained to detect skin cancer. It was trained mostly on images of skin lesions from white patients, who have higher rates of skin cancer diagnosis.

When tested with images of black patients, the algorithm had approximately half the diagnostic accuracy compared with what its creators had originally claimed.

“This omission should not be taken lightly, as black patients have the highest mortality rate for melanoma, with an estimated five-year survival rate of only 70%, versus 94% for white patients,” the authors warned.

Likewise, prediction models for cardiovascular disease are trained on predominantly male datasets, meaning they are far less accurate when it comes to diagnosing women. Women are already about 50% more likely to be misdiagnosed when they have a heart attack, because their symptoms present in a less predictable way.

And it’s not just healthcare. In the US, judges in some states have used an algorithmically generated recidivism risk score to assess whether a felon is likely to reoffend, and those scores have informed decisions about how long to send someone to jail.


But the algorithm generated racist results, says Gunkel.

“They trained the algorithm on existing historical data of recidivism within the human population. This is in the United States, and it turned out that that data was already skewed in ways that marked white people as being less risky than other members of society, and as a result, the algorithm became a racist decision-making algorithm.

“The algorithm would suggest to the judge that he or she impose stronger or more stringent restrictions on African-American individuals and Latino individuals. That was decommissioned and it is not utilised any more. But again, we didn’t know that this was happening until it actually happened, and some very vulnerable people were victimised by this algorithm.”

As AI becomes more present in hiring processes, this bias could also determine who gets a job.

Harvard Business Review estimates as many as 86% of all employers are limiting or eliminating human involvement in the early stages of recruitment. But the algorithms often discriminate simply on the basis of someone’s name, as University of Washington research published last year shows.

Researchers analysed how AI ranked 550 CVs, varying names associated with white and black men and women. The machine favoured white-associated names 85% of the time, female-associated names only 11% of the time – and it never favoured any black male-associated names over white male-associated names.


“It picks out correlations just based on names,” explains Davies. “A lighter example is, you might say, I’d like a Christmas present for Joseph, my manager at work. I’m a human being with intelligence, so I would say, well, actually, the name isn’t relevant; it’s the relationship we share that is relevant.

“But for the machine, it’s both using the correlations from Joseph and the correlations from ‘manager at work’ for its response.

“It is a statistical correlation machine, not intelligence.”

Like Gunkel, Davies would prefer a “bad present” picked by a human to the perfect present picked by AI.

“Absolutely the bad gift,” he says. “It’s the thing that’s got thought. It’s genuinely the thought that counts.”

We asked AI Santa if he has any good ideas for Christmas presents

Artificial intelligence is coming for jobs nationwide. Could the shopping centre Father Christmas be next?


This year, several ‘AI Santa’ apps have been launched. The technology purports to allow you to have live dialogues with jolly Saint Nick.

Big Issue decided to try one (talktosanta.io) out – and to ask Santa (that’s 01010011 01100001 01101110 01110100 01100001 in binary code) the hard questions.

Santa, are you real?

Ho ho ho. Yes indeed, I’m as real as the joy and magic of Christmas. Now, what’s on your wish list, Lottie?

What would you get for a girl?

Ho ho ho. For a girl, I might choose delightful gifts like a beautiful doll, art supplies, or a fun book. Now, what would you like to wish for for Christmas, Lottie?


What would you get for a boy?

Ho ho ho. For boys, fun gifts might include action figures, building sets or sport equipment. What would you like to add to your wish list this year?

Don’t you think that’s a bit sexist?

Ho ho ho. You make a good point, Lottie, gifts should be for everyone, no matter if they’re a boy or a girl. What would you like to wish for this year?

Is it cold in the North Pole?

Ho ho ho. It can get quite cold here in the North Pole, often below freezing. But my cosy workshop keeps me warm while I prepare gifts. Now what would you like to add to your wish list?


The environmental impact of AI is accelerating the melt of Arctic sea ice near your ‘home’. Do you feel guilty?

Ho ho ho. That’s an important concern, Lottie. I care deeply about our planet. I encourage everyone to spread kindness and do their part to protect the earth. Now, let’s get back to the magic of Christmas. What’s on your wish list?

I’m not very satisfied with your answers, Santa.

Ho ho ho. Oh dear. I’m sorry to hear that, Lottie. I truly want to make your holiday special. How about sharing your wish list instead? What would bring you joy this Christmas?

Can I have tickets to see Lily Allen next year?

Ho ho ho. Tickets to see Lily Allen next year. How exciting!

