The news has been broadly welcomed by AI experts – but they have been equally quick to emphasise the “huge risks” of the new technology. Previous reports have warned of a potential “job apocalypse” caused by the new tech, and of major privacy concerns.

Gaia Marcus, director of the Ada Lovelace Institute, urged the government to proceed carefully.

“Just as the government is investing heavily in realising the opportunities presented by AI, it must also invest in responding to AI’s negative impacts now and in the future,” she said.

“It is critical that the government look beyond a narrow subset of extreme risks and bring forward a credible vehicle and roadmap for addressing broader AI harms.”

AI presents many opportunities, said Chaitanya Kumar, head of environment and economy at the New Economics Foundation – but its potential harms are also “limitless”.

“Government talking about spreading the benefits of this new tech across the country. All of that sounds positive,” he said.

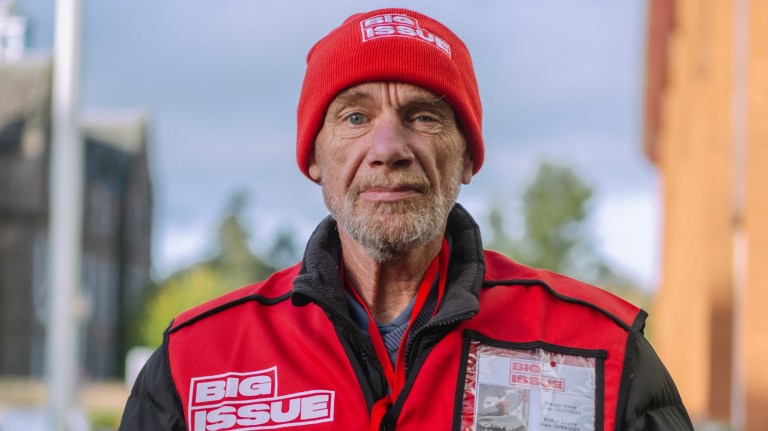

Advertising helps fund Big Issue’s mission to end poverty

“But a key question is, who will benefit from this new technology? How do we ensure it isn’t just a handful of powerful tech companies? And how do we minimise the risks when this technology is available to bad actors?”

What is in the government’s plan for AI?

The new “AI Opportunities Action Plan” has three pillars.

The first – “Laying the foundations” – sets out plans to increase AI capacity in the UK. It includes plans for ‘growth zones’ where planning arrangements for data centres will be fast-tracked; the first of these will be in Oxfordshire. Further zones will be created in “de-industrialised areas of the country with access to power”.

The government will draw up billion-pound contracts with the private sector to build a new public “compute” infrastructure.

This includes plans for a new “supercomputer” with “enough AI power to play itself at chess half a million times a second”. It is a U-turn on the government’s previous policy: last year, it scrapped Tory plans to build a supercomputer, citing a lack of funding.

This investment in public capacity is welcome, said Carsten Jung, a former Bank of England economist and head of macroeconomics at the IPPR think tank.

“Our previous research found that AI could either lead to eight million job losses and no GDP gains, or no job losses and GDP gains worth up to £306bn a year. The government has today made it clear that it’s understood this potential and the need to steer AI towards a positive scenario,” he said.

“Running public AI on public computers will also be key to ensure citizens’ trust in the technology.”

The IPPR estimate that AI’s impact on GDP could be as low as zero or as high as 13% over the next decade.

Much of this will depend on the implementation of the second pillar: “Boosting adoption across public and private sectors.”

Departments are being encouraged to adopt AI pilot schemes, with a view to increasing government efficiency – for example, by taking paperwork off civil servants’ hands or by spotting potholes.

Increased efficiency is a good thing, said Kumar – as long as the benefits are equitably shared.

“It’s fairly clear that productivity gains and actual wage rises for workers have decoupled in the last 40 years,” he said. “Why is it that we think that this will change and everybody will naturally benefit as a result of productivity increases? The jury is very much out on that.”

Additionally, leaving public sector decisions in the hands of computers will have “real-world impacts on people,” said Gaia Marcus.

“We look forward to hearing more about how departments will be incentivised to implement these systems safely as they move at pace.”

The Big Issue has previously reported on the dangers of embedding AI in the benefits system – with one claimant alleging it brings “bias and hunger”.

The sharing of data also brings privacy concerns. The government intends to make anonymised NHS data available for “researchers and innovators” to train their AI models.

Cabinet Office minister Pat McFadden admitted that safety concerns are significant.

“We’ve got to have an eye on safety as well as opportunity,” he told the BBC. “The truth is, you can’t just opt out of this. Or if you do, you’re just going to see it developed elsewhere.”

The plan will set up a National Data Library to “safely secure public data”. But it is currently very difficult for individuals to prevent their data being used, Kumar said – and hard to imagine this changing soon.

“A lot of the innovations we’ve seen so far have been basically on using data in different ways. AI can see patterns in medical data that humans can’t read, for example.

“But the question becomes, what data is this, whose data is this? How do you and I, as individuals, have a right to say, ‘No, I do not want my data to be used by these tools.’ Do those opportunities exist? I doubt it. Even if I really wanted to, at the moment, I wouldn’t know where to start in terms of like, do I write to all of these companies and say, do not use my data?”

The environmental impact of AI is also a big concern, with data centres consuming vast amounts of energy. They also need to be constantly cooled – according to one estimate, drafting a single email with ChatGPT uses the equivalent of a bottle of water.

The government will set up an AI Energy Council, led by energy secretary Ed Miliband, which will focus on AI’s energy demands.

But there is a “huge environmental problem”, said Kumar. Only renewables will be able to tackle the energy demands, he added.

“Five years ago, AI wasn’t even in the equation in terms of such large energy consumption,” he said. “Now the energy demand of some data centres exceeds that of some small countries.”

Finally, the third pillar: “Keeping us ahead of the pack.”

“A new team will be set up to keep us at the forefront of emerging technology. This team will use the heft of the state to make the UK the best place for business. This could include guaranteeing firms access to data and energy,” Starmer said today.

But “keeping ahead of the pack” will require massively beefing up regulators, Kumar warned.

“Social media has exposed how regulators find it difficult to keep pace with technological change,” he said. “AI will only turbocharge that challenge and the government needs to be fleet-footed on clamping down on harmful use cases of AI as quickly as possible.

“My fear is that no government is prepared in any manner to be able to tackle such a large-scale proliferation of artificial intelligence tools across the world.”
