The reports come as prime minister Keir Starmer has claimed he wants to “mainline AI into the veins” of the UK.

Campaigners have explained that using automation to speed up processes within the benefits system “could be positive – if done right” but warned about the potential bias in AI systems.

Shelley Hopkinson, head of policy and influencing at Turn2us, told the Big Issue that speeding up the benefits process could have a positive impact on those “struggling to contact the DWP and facing long waits for decisions”.

“Past issues with AI-driven decision-making show how automation can introduce bias and unfairly penalise people who need support,” Hopkinson added.

“It’s crucial that people understand how these systems work and have clear routes to challenge mistakes. The government must ensure that AI works for people, not against them.”

In December, The Guardian reported that a machine-learning programme used by the DWP to detect benefit fraud had shown bias on factors such as age, disability and nationality. An internal assessment of the programme found a “statistically significant outcome disparity” in its results.

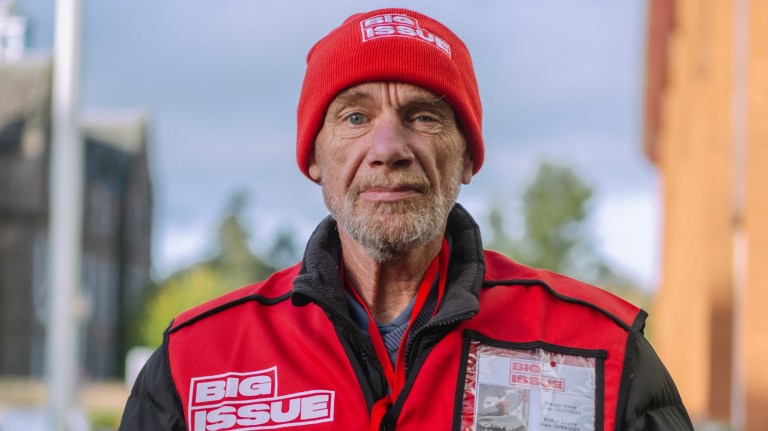

Advertising helps fund Big Issue’s mission to end poverty

Further research found that 200,000 people have been wrongly investigated for housing benefit fraud and error because of poor algorithmic judgment.

The DWP claimed the current ESA tool has a low risk of bias, as no personal details about the claimant – such as age or race – are provided to the AI model, only claimants’ medical conditions.

Jasleen Chaggar, legal and policy officer at Big Brother Watch, added that the DWP “acknowledges the risk of its agents blindly following automated decisions on welfare eligibility”.

“Without a human backstop, thousands of claimants could have been wrongfully declared ineligible due to algorithmic error – and the secrecy of the system leaves them powerless to challenge it,” Chaggar explained.

She added that the “covert use of this highly inaccurate automated tool between 2020 and 2024 highlights the disquieting feeling that members of the public are being used as guinea pigs to test unreliable algorithms with life-altering consequences”.

“At the same time as the government is rolling out automated systems across public services, it is stripping away the protections that safeguard us from the errors, bias and discriminatory outcomes that invariably accompany them,” she said.

“The government should reconsider its plans to water down protections on automated decision-making and fulfil its promise to publish information about the algorithms it is already using.”

AI can mean trouble for disabled people, DWP told

Mikey Erhardt, campaigner at Disability Rights UK, claimed the way AI is trained means it could contain “troubling and damaging stereotypes of disabled people and our lives”, and that benefits claimants could even be falsely “flagged” for fraud.

“You cannot separate AI or an algorithm from the training data it is built on,” Erhardt explained.

“These digital social security systems, built on tonnes of data, have repeatedly been found to be biased against people based on their age, race, gender and disability, according to research on systems from Sweden and Denmark.

“The government has not made it clear what the safeguards are – and if even a small fraction of the 20 million people interacting with the benefit system are falsely flagged for fraud as a result of these opaque systems, the consequences are potentially life-changing.”

A DWP spokesperson told the Big Issue: “We are using artificial intelligence to deliver the Plan for Change needed to boost our economy and living standards, as well as rightly exploring how we can use AI to deliver the department’s services efficiently and improve customer experience.

“AI does not replace human judgement – benefit award decisions are always made by a DWP caseworker and anyone who disagrees with a decision can appeal.”
